The Fokker-Planck forward equation is an important tool to calibrate local volatility extensions of stochastic volatility models, e.g. the local vol extension of the Heston model. But the treatment of the boundary conditions – especially at zero instantaneous variance – is notoriously difficult if the Feller constraint

of the square root process for the variance

is violated. The corresponding Fokker-Planck forward equation

describes the time evolution of the probability density function p and the boundary at the origin is instantaneously attainable if the Feller constraint is violated. In this case the stationary solution [1] of the Fokker-Planck equation

diverges with

and the zero flow boundary condition at the origin

becomes hard to track numerically. Rewriting the partial differential equation in terms of helps to mitigate the problem [2].

The corresponding zero flow boundary condition in q reads as follows

The first step in order to apply the boundary condition is to discretize the transformed Fokker-Planck equation in space direction on a non-uniform grid

with

Next step is to discretize the zero flow boundary condition using a second order forward discretization [3] for the derivative .

with

Now the value at the boundary

can be expressed in terms of

and

.

This equation can be used to remove the boundary value from the discretization of the transformed Fokker-Planck equation. In the same manner the zero flow boundary condition can be implemented for the upper boundary

and for the original Fokker-Planck equation.

The following parameters of the square-root process will be used to test the different boundary conditions.

The uniform grid has 100 or 1000 grid points and the stationary solution

of the Fokker-Planck equation is chosen as the start configuration on the grid points. The boundaries

are defined via the inverse cumulated distribution function such that

.

The grid covers 98% of the distribution and this value would not change over time if the boundary conditions are satisfied without discretiszation errors. To test the magnitude of the discretization errors we let the initial solution evolve over one year using 100 time steps with the Crank-Nicolson scheme. The resulting solution is interpolated using cubic splines to get the function

. The value

servers as a quality indicator for the discretization errors of the boundary condition.

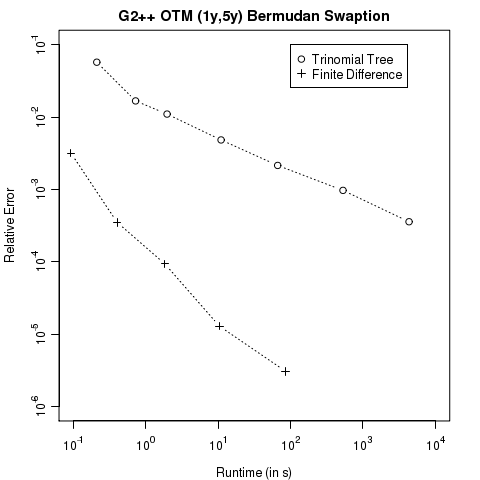

As can be seen in the diagram below if is small then the original Fokker-Planck equation shows smaller discretization errors than the transformed Fokker-Planck equation in

. But if

becomes larger and especially if the Feller constraint is violated then the solution of the transformed Fokker-Planck equation clearly outperforms the original solution. As a rule of thumb based on this example one should use the original Fokker-Planck equation if

and the transformed equation otherwise.

The same techniques can be used to solve the Fokker-Planck equation for the Heston model. The code for the numerical tests is available in the latest QuantLib version from the SVN trunk. The diagram is based on the test FDHestonTest::testSquareRootEvolveWithStationaryDensity.

[1] A. Dragulescu, V. Yakovenko, Probability distribution of returns in the Heston model with stochastic volatility

[2] V. Lucic, Boundary Conditions for Computing Densities in Hybrid Models via PDE Methods

[3] P. Lermusiaux, Numerical Fluid Mechanics, Lecture Note 14, Page 5